National Undergraduate Electronic Design Contest

Overview

In the National Undergraduate Electronic Design Contest (Aug 2023), our team designed and built a laser motion control and auto-tracking system, earning a provincial second prize. The contest required us to deliver a working system within four days. Our solution combined servo-driven motion control with real-time laser tracking, using camera-based image processing, PID feedback control, and Kalman filtering.

Results

- Achievement: Provincial Second Prize, ranked second among teams in our school.

- Performance Metrics:

  - Motion control: average error of 1.1 cm during testing.

  - Laser tracking: stable, with a maximum tracking error of 3.6 cm at moderate speeds.

- Presentation: Delivered a working prototype that met the core requirements within the contest time limit.

[Report PDF (Chinese version)]

Technical Details

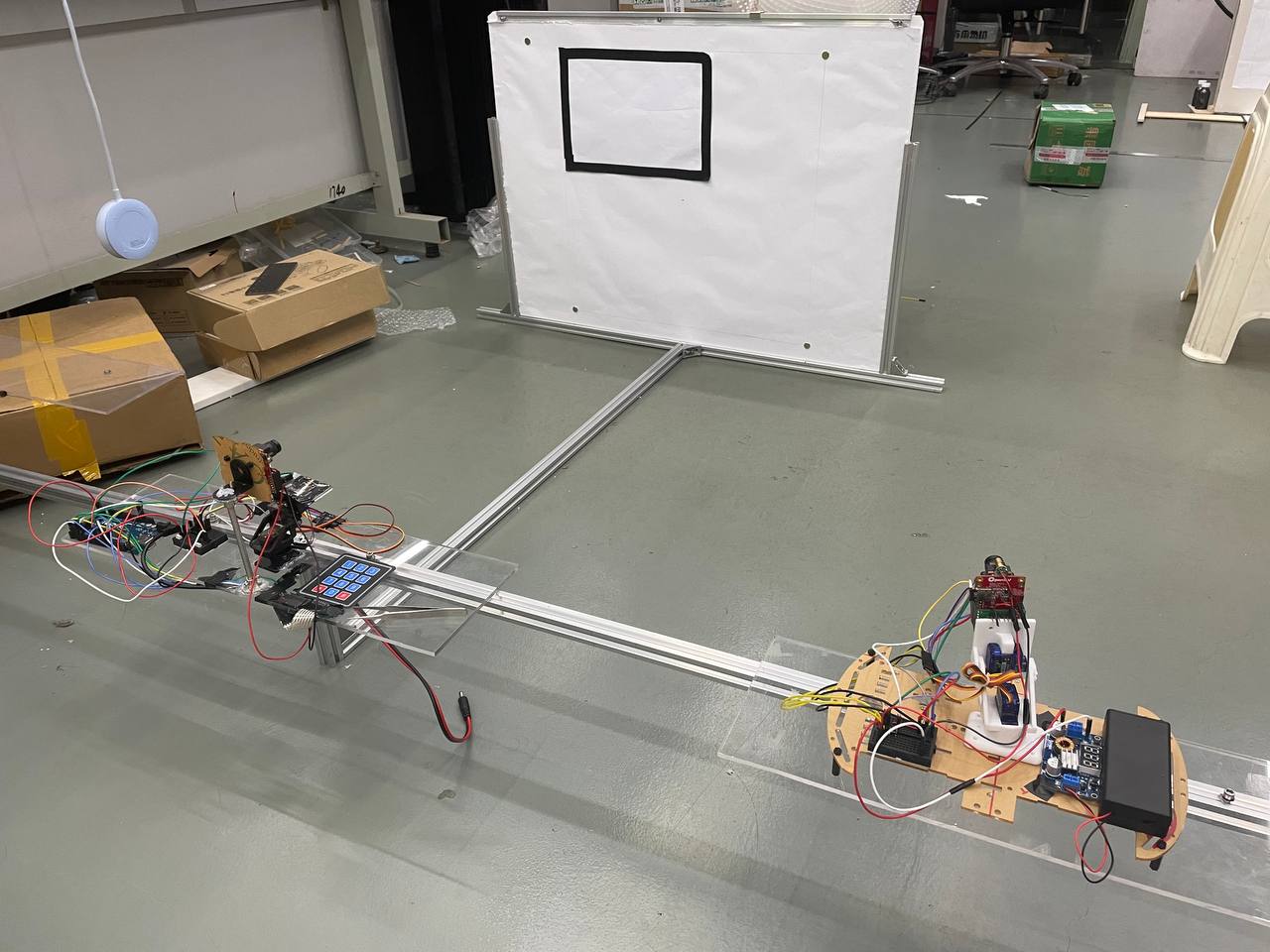

- System Architecture:

- Two independent servo-driven gimbals controlled by an Arduino Mega2560 microcontroller.

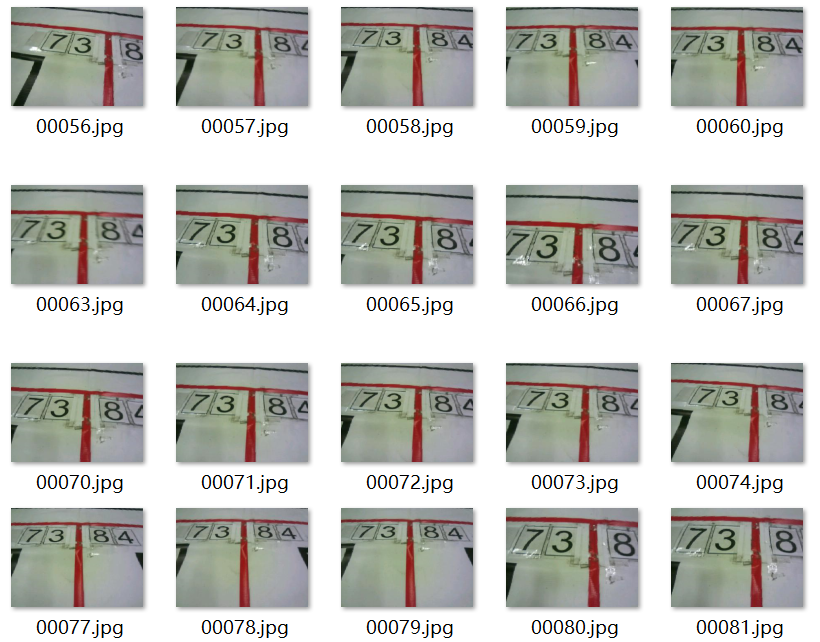

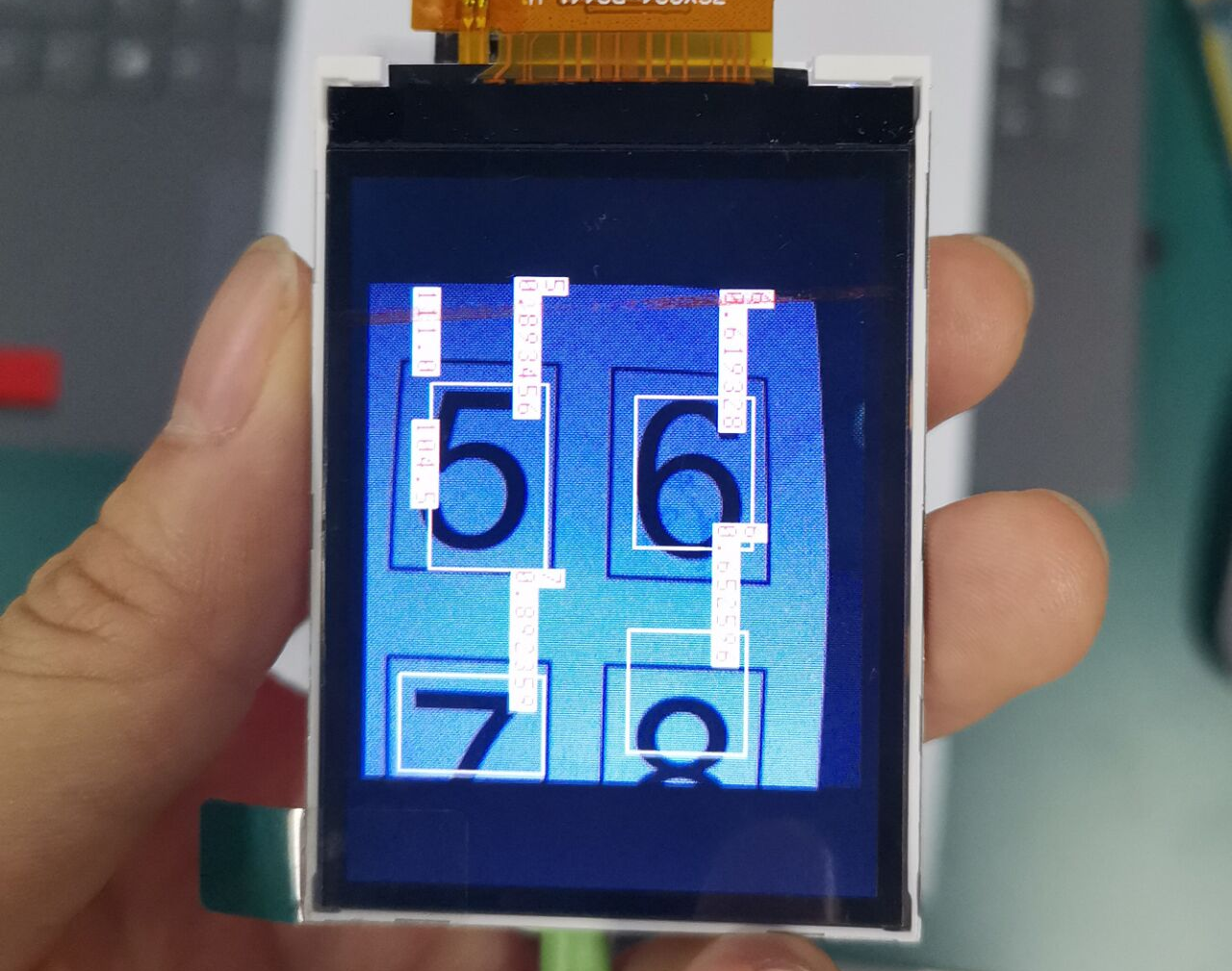

- Image processing conducted via OpenMV H7 to identify and track red and green laser spots.
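To illustrate how red and green spots can be told apart in LAB space, here is a minimal Python sketch that converts an sRGB pixel to CIELAB and checks it against threshold tuples. It is not the contest code: the function names and threshold values are hypothetical, and real thresholds would need tuning on the actual camera. The tuple layout (L min, L max, A min, A max, B min, B max) follows the one OpenMV's `find_blobs` accepts.

```python
# Hypothetical LAB thresholds: (L_min, L_max, A_min, A_max, B_min, B_max).
# Red sits at strongly positive A, green at strongly negative A.
RED_THRESHOLD = (20, 100, 30, 127, -20, 127)
GREEN_THRESHOLD = (20, 100, -128, -30, -20, 127)


def srgb_to_lab(r, g, b):
    """Convert an 8-bit sRGB pixel to CIELAB (D65 white point)."""
    def to_linear(c):
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)
    # Linear sRGB -> CIE XYZ
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t):
        return t ** (1 / 3) if t > 0.008856 else 7.787 * t + 16 / 116

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def in_threshold(lab, threshold):
    """Return True if every LAB channel lies inside the threshold box."""
    l_min, l_max, a_min, a_max, b_min, b_max = threshold
    l, a, b = lab
    return l_min <= l <= l_max and a_min <= a <= a_max and b_min <= b <= b_max


def classify_pixel(r, g, b):
    """Label a pixel 'red', 'green', or None using the thresholds above."""
    lab = srgb_to_lab(r, g, b)
    if in_threshold(lab, RED_THRESHOLD):
        return "red"
    if in_threshold(lab, GREEN_THRESHOLD):
        return "green"
    return None
```

Because the A channel separates red from green largely independently of lightness, a box like this tends to tolerate brightness changes better than raw RGB thresholds.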

- Algorithms:

  - Coordinate Mapping: Developed a nonlinear mapping between screen coordinates and gimbal angles using MATLAB-based regression.

  - Motion Control: Employed discrete PID algorithms for trajectory interpolation and servo adjustments.

  - Tracking: Enhanced tracking accuracy with Kalman filtering to predict and correct laser positions.
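The predict-and-correct idea behind the Kalman tracking step can be sketched as follows. This is a minimal one-axis, constant-velocity filter in plain Python, assuming one filter per image axis; the class name, the motion model, and the noise values are assumptions for illustration, not the parameters used in the contest.

```python
class ConstantVelocityKalman1D:
    """One-axis Kalman filter with state [position, velocity]."""

    def __init__(self, pos=0.0, vel=0.0, process_var=50.0, meas_var=4.0):
        self.x = [pos, vel]
        # Large initial covariance: we trust the first measurements, not the guess.
        self.P = [[1000.0, 0.0], [0.0, 1000.0]]
        self.q = process_var  # assumed acceleration noise variance
        self.r = meas_var     # assumed measurement noise variance

    @property
    def velocity(self):
        return self.x[1]

    def predict(self, dt):
        """Propagate the state by dt seconds; returns the predicted position."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (p00, p01), (p10, p11) = self.P
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and
        # Q = q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]]
        n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + self.q * dt ** 4 / 4
        n01 = p01 + dt * p11 + self.q * dt ** 3 / 2
        n10 = p10 + dt * p11 + self.q * dt ** 3 / 2
        n11 = p11 + self.q * dt ** 2
        self.P = [[n00, n01], [n10, n11]]
        return self.x[0]

    def update(self, z):
        """Correct the state with a measured position z; returns the estimate."""
        (p00, p01), (p10, p11) = self.P
        innovation = z - self.x[0]
        s = p00 + self.r              # innovation variance (H = [1, 0])
        k0, k1 = p00 / s, p10 / s     # Kalman gain
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P = (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.x[0]
```

In use, `predict(dt)` would run every frame and `update(z)` only when a spot is detected, so the filter can coast through a missed detection on its velocity estimate.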

- Challenges:

  - Overcame mechanical inaccuracies by calibrating servo feedback with additional correction factors and replacing faulty servos to improve trajectory precision.

  - Improved image processing robustness under varying light conditions through optimized exposure settings and LAB color space filtering.

  - Enhanced tracking success rates for high-speed laser movements by adjusting control frequencies and refining PID parameters for smoother tracking.
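The interplay between control frequency and PID gains can be sketched with a small discrete controller. This is an illustrative Python version, not the Arduino code from the contest: the class, the gains, the output limit, and the toy gimbal model (a rate-commanded axis with a constant bias) are all assumptions.

```python
class DiscretePID:
    """Positional discrete PID with output clamping and conditional integration."""

    def __init__(self, kp, ki, kd, rate_hz, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.dt = 1.0 / rate_hz  # sample period follows from the control frequency
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measured):
        error = setpoint - measured
        # No derivative on the very first sample (no previous error yet).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        if self.out_min <= u <= self.out_max:
            self.integral = candidate  # integrate only while unsaturated
            return u
        return min(max(u, self.out_min), self.out_max)


def simulate(pid, setpoint, steps, bias=-5.0):
    """Toy plant (assumed): angle integrates the commanded rate plus a bias."""
    angle = 0.0
    for _ in range(steps):
        rate_cmd = pid.step(setpoint, angle)
        angle += (rate_cmd + bias) * pid.dt
    return angle


# Hypothetical gains for a 50 Hz loop, output limited to +/-60 deg/s.
pid = DiscretePID(kp=4.0, ki=8.0, kd=0.05, rate_hz=50, out_min=-60.0, out_max=60.0)
final_angle = simulate(pid, setpoint=10.0, steps=250)
```

Since `dt` enters both the integral and derivative terms, changing the loop rate changes the effective behavior of the same gains, which is one reason frequency and PID parameters have to be tuned together.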

Reflection and Insights

This competition was a test of endurance, adaptability, and teamwork. The intense four-day schedule required rapid problem-solving and collaboration. The experience highlighted the importance of robust system design and precise calibration in achieving high-performance results. It also reinforced the value of integrating advanced algorithms, such as Kalman filtering, to address real-world constraints.

Team and Role

- Team: A three-member team collaboratively handled hardware design, software development, and optimization.

- My Role:

  - Led the implementation of image processing algorithms and Kalman filtering.

  - Developed and tested the PID control system for trajectory tracking.

  - Conducted system debugging and parameter tuning under competition constraints.